Build a Smart RAGent Chatbot: Combine RAG and ReAct Agent with Tools in One Powerful App

🤖 Building AI applications is no longer just for big tech giants. With the right tools and a bit of guidance, anyone can craft smart, useful systems that tackle real-world problems with ease. This blog post is a practical, hands-on guide to building one such system — a chatbot that blends document understanding with intelligent tool-based reasoning.

Meet RAGent, a smart assistant that brings together the power of Retrieval-Augmented Generation (RAG) and the ReAct Agent framework. But it's not just another chatbot. RAGent can read and answer questions from your uploaded documents — whether it's a PDF, Excel file, or Word doc — and if needed, it knows when to search the web, use a calculator, or check the weather to get the right answer.

🧠 Think of it as your intelligent co-pilot — one that knows when to read, when to think, and when to act.

If you're curious to see it in action, you can try the app live on Hugging Face Spaces. And if you want to dive into the code and build it yourself, everything is open-sourced on GitHub.

This guide will walk you through the process — not just how to build it, but how to make it smart, useful, and ready for real-world tasks. Let's get started! 🚀

📚 Table of Contents

- 📘 Introduction

- 📌 Project Overview

- 🗂️ RAGent Project Structure

- ⚙️ Setting Up the Environment

- 🧠 Implementing the LLM

- 📜 Understanding the Prompts

- 🔗 Building the RAG Pipeline

- 📦 Retrieval with Qdrant

- 🧭 Designing the ReAct Agent

- ReAct Loop: Thought → Action → Observation → Final Answer

- Prompt Format in Agent Prompt

- Agent Workflow

- 🛠️ Developing Custom Tools

- 🧵 Memory Management

- 💬 Gradio Web UI

- File Upload Interface

- Chat Logic Interface

- Tool Responses and Chat History

- Try Live RAGent App: GitHub & Hugging Face

- 🎯 Conclusion

📘 1. Introduction

Modern users expect more from AI chatbots. It's no longer enough to just generate clever text — they want assistants that understand uploaded files, fetch live information from the web, calculate results, and even explain complex topics — all in one smooth conversation.

This is exactly what RAGent delivers — a smart hybrid chatbot built by combining Retrieval-Augmented Generation (RAG) with the powerful reasoning flow of a ReAct Agent. In this blog post, we'll walk through how to build such a system from scratch and show you how it thinks, retrieves, and acts to serve intelligent answers.

🤖 What is RAGent Chatbot?

RAGent is a thinking assistant that goes far beyond static search. It allows users to upload files — such as PDFs, Excel sheets, Word documents, CSVs, and JSON — and ask natural language questions about the content. If the answer can't be found in the documents, it automatically knows how to switch gears: it can search the web, calculate math, check the weather, or even summarize using a general-purpose LLM.

The chatbot operates using a simple yet powerful loop:

Thought → Action → Observation → Final Answer

This loop makes it capable of step-by-step reasoning — much like how a human assistant would think through a task before responding.

🧠 Why Combine RAG and ReAct Agent?

RAG is perfect when the answer exists in a document. But when the answer isn't there — RAG can't help. Meanwhile, ReAct-style agents are great at using tools to reason and fetch live information, but they don't directly process user-uploaded files. When you combine both, magic happens.

RAGent intelligently chooses between:

- 📄 Reading and searching your uploaded documents

- 🌐 Searching the internet when documents fall short

- ➗ Performing real-time calculations

- 🌦️ Fetching up-to-date weather data

- 🧠 Using an LLM to summarize or explain information

All of this is done automatically — based on the question you ask — without you needing to specify the method. It's an elegant mix of retrieval, logic, and action that feels truly intelligent.

📌 2. Project Overview

Before jumping into the code, let's pause and explore what we're about to build. This section outlines the core capabilities of the RAGent Chatbot, the types of files it supports, and how the entire system flows from start to finish. Getting this big-picture view helps us appreciate how all the components work together like clockwork. 🕰️

🔑 2.1 Key Features

What makes RAGent special? It's not just another chatbot. Here's what sets it apart:

- 🧠 Understands Documents: Upload a file and ask anything — RAGent can summarize, extract insights, or fetch exact data from your documents.

- 🧰 Uses Tools When Needed: If the document doesn't contain the answer, it intelligently switches to tools like 🌐 Web Search, 📚 Wikipedia, ☁️ Weather, ➗ Calculator, or 📝 LLM-based helpers.

- 🔄 Thinks Step-by-Step: Follows a thoughtful reasoning loop:

Thought → Action → Observation → Final Answer - 🔗 Powered by Gemini LLM: Uses Google's Gemini model to generate smart and fluent responses.

- 💻 Runs on a Clean Web UI: Built using Gradio, so everything works seamlessly in the browser.

📂 2.2 Supported File Types

RAGent can work with a variety of document formats — so whether you're a researcher, a student, or a data analyst, uploading your files is quick and simple:

- 📄 PDF (.pdf)

- 📝 Word (.docx)

- 📊 Excel (.xlsx)

- 📈 PowerPoint (.pptx)

- 📎 CSV (.csv)

- 🧾 JSON (.json)

- 📃 Plain Text (.txt)

Once uploaded, the content is automatically chunked and embedded into a vector database, so it's searchable and context-aware during conversations.

🔄 2.3 Workflow Summary

Let's walk through how RAGent operates behind the scenes:

- 📤 Upload documents via the Gradio interface

- 🧩 Documents are chunked and embedded using a vector model

- 📦 Chunks are stored in Qdrant, a fast and scalable vector database

- ❓ Ask your question in natural language

- 🔍 The RAG system tries to retrieve the answer from the uploaded chunks

- 🔄 If RAG fails, the ReAct Agent triggers a relevant tool (like a web search or calculator)

- ✅ The final answer is presented, along with the reasoning that led to it

This hybrid pipeline ensures that no matter the query — whether it's document-based or external, you always get the best possible answer. 📈

🗂️ 3. RAGent Project Structure

To keep things clean, modular, and scalable, the RAGent Chatbot is carefully structured into folders and modules — each handling a specific part of the system. This section breaks down the project layout so you can see how everything fits together behind the scenes.

📁 3.1 High-Level Directory Overview

Here's a snapshot of the entire project structure:

ragent_chatbot/

│

├── app.py

├── agent.py

├── rag.py

├── config.py

├── tool_registry.py

├── requirements.txt

├── README.md

├── .env

│

├── retriever/

│ ├── __init__.py

│ └── qdrant_retriever.py

│

├── tools/

│ ├── __init__.py

│ ├── base_tool.py

│ ├── calculator_tool.py

│ ├── llm_tool.py

│ ├── rag_tool.py

│ ├── weather_tool.py

│ ├── web_search_tool.py

│ └── wikipedia_tool.py

│

├── vector_db/

│ ├── __init__.py

│ ├── chunker.py

│ ├── data_embedder.py

│ └── qdrant_db.py

│

├── prompts/

│ ├── agent_prompt.txt

│ └── rag_prompt.txt

│

├── llm/

│ ├── __init__.py

│ └── gemini_llm.py

│

├── memory/

│ ├── __init__.py

│ └── chat_memory.py

│

├── data/

├── doc_chunks/

├── questions/

│ └── test_questions.txt

├── nltk_words/

│

├── utils/

│ ├── __init__.py

│ ├── html_template.py

│ ├── nltk.py

│ └── normalizer.py

│

├── figure/

├── icons/

Each folder and file plays a dedicated role. Let's explore them more closely.

📦 3.2 Purpose of Key Folders and Files

The RAGent project is structured to be clear, modular, and easy to maintain. Below is a detailed breakdown of all the files and folders, grouped by their purpose. This will help you navigate the codebase and understand the role of each component.

📄 Root-Level Files

- app.py — The main entry point for the Gradio web UI. It manages file uploads, user queries, and connects the frontend to the agent logic.

- agent.py — Contains the core reasoning logic based on the ReAct framework. It decides whether to answer from documents or invoke a tool.

- rag.py — Coordinates the RAG pipeline, including document chunking, embedding, and retrieving relevant context.

- config.py — Stores configuration variables like model names, API keys, and file paths — centralized for easy updates.

- tool_registry.py — Dynamically discovers and registers all available tools from the tools/ folder, enabling plug-and-play extensibility.

- requirements.txt — Lists all Python dependencies needed to install and run the app properly.

- README.md — Project documentation that explains how to install, configure, and use the chatbot system.

- .env — Stores private credentials, API keys, and model configuration values that shouldn't be hardcoded.

📁 Files within Folders

- __init__.py — Initializes the retriever module.

- qdrant_retriever.py — Performs similarity search using vector embeddings, including hybrid BM25-based queries from Qdrant.

- base_tool.py — Defines the abstract base class for tools. All tools inherit from this.

- calculator_tool.py — Solves mathematical expressions parsed from user queries.

- llm_tool.py — Leverages Gemini LLM for summarization, rewriting, and general instructions.

- rag_tool.py — Interfaces with the RAG pipeline to answer document-based questions.

- weather_tool.py — Fetches current weather information using location-based queries.

- web_search_tool.py — Sends real-time search queries to a web search engine.

- wikipedia_tool.py — Retrieves short definitions and summaries from Wikipedia.

- chunker.py — Splits long document text into digestible chunks that are embedding-ready.

- data_embedder.py — Converts text chunks into dense vector representations using embedding models.

- qdrant_db.py — Handles storing and querying chunk embeddings in the Qdrant vector database.

- agent_prompt.txt — Defines the exact format of reasoning: Thought → Action → Observation → Final Answer.

- rag_prompt.txt — Provides clear instructions for answering questions using retrieved document context.

- gemini_llm.py — Integrates Gemini model APIs for both chat and embedding functionality.

- chat_memory.py — Maintains chat history using LangChain-compatible message formats.

- test_questions.txt — Sample QA pairs for evaluating the system's responses.

- html_template.py — Generates styled UI responses using markdown and HTML.

- nltk.py — Tokenizes and processes text using NLTK tools.

- normalizer.py — Cleans and standardizes raw document content before chunking.

By organizing the project in this modular way, development becomes easier, debugging becomes faster, and scaling becomes much more manageable. Every component has a clear job — and all of them work together like a well-coordinated team. 🧩

⚙️ 4. Setting Up the Environment

Before running the RAGent Chatbot, it's important to set up a clean and well-configured development environment. This step ensures all dependencies are correctly installed, environment variables are securely managed, and the system is ready to run the app smoothly.

We recommend using Python 3.12 for compatibility and performance. Make sure it's installed on your machine before proceeding. You can use pyenv or your OS's package manager to manage versions.

📦 4.1 Installing Dependencies

All required Python packages for the chatbot are listed in the requirements.txt file. It includes everything from document loaders and vector DB clients to UI libraries and language model integrations.

To install them all at once, run the following command in your terminal:

pip install -r requirements.txt

Here's a snapshot of what's inside the file:

streamlit==1.46.1

langchain==0.3.26

langchain-community==0.3.26

langchain-google-genai==2.1.5

qdrant-client==1.14.3

pdfplumber==0.11.7

unstructured==0.18.3

python-docx==1.2.0

python-pptx==1.0.2

openpyxl==3.1.5

jq==1.9.1

python-dotenv==1.1.1

sentence-transformers==4.1.0

transformers==4.53.0

tavily-python==0.7.9

wikipedia-api==0.8.1

nltk==3.9.1

numexpr==2.11.0

These libraries collectively enable:

- 📄 Parsing and reading documents like PDF, DOCX, PPTX, CSV, and Excel

- 🧠 Generating and handling embeddings

- 🔗 Integrating with Qdrant for vector storage

- 🧰 Using tools such as weather APIs, calculators, and LLMs

- 💻 Building the web UI with Streamlit

👉 Tip: It's highly recommended to install everything inside a virtual environment using venv or virtualenv to avoid conflicts with global packages.

🔐 4.2 Setting Up the .env File

The chatbot relies on several external APIs and configurations. To keep these secure and manageable, we use a .env file at the root level to store sensitive data such as API keys and cloud endpoints.

Create a new file named .env and add the following entries:

GOOGLE_API_KEY=your_gemini_api_key

OPENWEATHER_API_KEY=your_open_weather_api_key

TAVILY_API_KEY=your_tavily_search_api_key

QDRANT_URL=your_qdrant_cloud_url

QDRANT_API_KEY=your_qdrant_api_key

Here's a breakdown of what each key is used for:

- GOOGLE_API_KEY: Grants access to Google's Gemini LLM for chatting and embedding.

- OPENWEATHER_API_KEY: Used by the weather tool to fetch real-time forecasts.

- TAVILY_API_KEY: Enables fast and accurate web search results via the Tavily API.

- QDRANT_URL & QDRANT_API_KEY: Connect the app to your Qdrant cloud instance for vector storage and retrieval.

With python-dotenv already included in the dependencies, the app will automatically load these values at runtime without you needing to hardcode them anywhere.

🧠 5. Implementing the LLM

At the heart of RAGent is Google's Gemini — a powerful and reliable language model that drives intelligent conversations, structured reasoning, and contextual document answering. In this section, we'll look at how Gemini is configured and used differently in the agent and the RAG pipeline.

🧠 5.1 Google Gemini Overview

The file gemini_llm.py wraps around the Gemini LLM using LangChain's ChatGoogleGenerativeAI and makes it easy to access the model throughout the app.

Here's how it's implemented:

import os

from config import Config

from dotenv import load_dotenv

from langchain_core.messages import HumanMessage

from langchain_google_genai import ChatGoogleGenerativeAI

load_dotenv()

class GeminiLLM:

def __init__(self):

self.api_key = os.getenv("GOOGLE_API_KEY")

if not self.api_key:

raise ValueError("GOOGLE_API_KEY not found in environment variables")

self.model_name = Config.LLM_MODEL

self.temperature = Config.TEMPERATURE

self.gemini_client = self._initialize_client()

def _initialize_client(self):

return ChatGoogleGenerativeAI(

google_api_key=self.api_key,

model=self.model_name,

temperature=self.temperature

)

def get_client(self):

return self.gemini_client

This class ensures that Gemini is securely initialized and available using get_client(). You can also test it standalone like this:

if __name__ == "__main__":

gemini_llm = GeminiLLM()

llm = gemini_llm.get_client()

response = llm.invoke([HumanMessage(content="Explain LangChain in 5 sentences")])

print("### Gemini Response:\n", response.content)

🤖 5.2 How Gemini is Integrated into the Agent and RAG

Gemini plays two different roles in the system — as a reasoning engine for the agent and as a document-answering model in the RAG pipeline.

- 🔹 Gemini is initialized with a system prompt from agent_prompt.txt:

Thought → Action → Observation → Final Answer

This defines how the agent should think and respond. - 🔹 Tools are registered dynamically using ToolRegistry(). These include:

- 🌐 Web search

- 📚 Wikipedia

- 🌦️ Weather API

- ➗ Calculator

- 📝 LLM summarization

- 📦 RAG tool

- 🔹 A ReAct agent is created using: initialize_agent(...) with Gemini and the tool list

- 🔹 When running, the agent handles reasoning using: self.react_agent.invoke(messages)

- 📌 If parsing fails, it falls back to Gemini directly: self.llm.invoke(messages)

- 🔹 Gemini is initialized for direct question-answering on document chunks: self.llm = GeminiLLM().get_client()

- 🔹 A prompt from rag_prompt.txt is loaded to guide responses using only retrieved content.

- 🔹 A retrieval + QA chain is built:

self.chain = create_retrieval_chain(self.retriever, self.qa_chain) - 🔹 Final answer is returned and stored: response = self.chain.invoke(inputs)

- 🧠 Chat context is updated using MemoryManager.

While both use the same Gemini client, they follow different flows — one supports reasoning and tool invocation, the other focuses on contextual answering from documents. This dual setup is what gives RAGent its flexibility and intelligence. 🔄

📜 6. Understanding the Prompts

Prompts are the silent architects behind any LLM-based system. They shape how the model interprets the task, guides its reasoning, and decides what kind of answer to produce. In the RAGent system, two carefully written prompts control the entire experience — one dedicated to tool-driven reasoning, and the other to document-based answering.

Let's take a closer look at how each prompt works and how they contribute to making the chatbot smart, structured, and reliable.

🧠 6.1 Agent Prompt — Tool Reasoning Format

The agent_prompt.txt file defines the reasoning structure for the ReAct-style agent. It trains the LLM to think step-by-step instead of jumping straight to answers. This helps the agent behave more like an investigator — breaking down the problem, using tools when needed, and always justifying its decisions.

Here's the core format that the prompt enforces:

Thought: Explain what you are thinking.

Action: {"action": "tool_name", "action_input": "input string"}

Observation: Describe the result from the tool.

...

Final Answer: <your answer here>

🔍 Some key rules included in this prompt:

- Never guess — always rely on tools for facts

- Explain reasoning before and after using any tool

- Always try rag_search first for factual or document-related queries

- Use other tools like web_search, wikipedia, calculator only if RAG fails

- Actions must be formatted as single-line JSON — no code blocks or backticks

📚 Here's an example of how the reasoning cycle looks in action:

Thought: The question might be answered using documents.

Action: {"action": "rag_search", "action_input": "What is K12HSN?"}

Observation: K12HSN stands for K-12 High-Speed Network, a California education network.

Thought: I now know the answer.

Final Answer: K12HSN stands for K-12 High-Speed Network, a California initiative for education connectivity.

This prompt transforms the LLM into a logical, tool-using assistant that can explore and reason rather than respond blindly.

📄 6.2 RAG Prompt — Document Answering Logic

The rag_prompt.txt is used in the RAG pipeline. Its goal is simple but strict: only allow the LLM to answer based on the uploaded documents — no hallucination, no external assumptions.

The input to this prompt typically follows this structure:

Chat History:

{chat_history}

User Question:

{input}

Relevant Context:

{context}

🧷 The following rules are enforced to maintain accuracy:

- Use only the given context to answer

- Do not rely on external facts, even if they seem obvious

- Always write clearly and naturally

- If the answer isn't found, say:

"I don't know based on the document and our previous conversation."

📚 Imagine a user uploads a PDF about a school's budget and asks:

The system will:

- Retrieve relevant chunks about "IT" and "budget"

- Insert them into {context}

- Let Gemini answer strictly using that context

This approach ensures every response is grounded in facts and traceable to a document source — no guessing allowed.

Together, these two prompts — agent_prompt.txt and rag_prompt.txt — give RAGent its core superpowers: the ability to reason through tools and respond truthfully from documents. This is what makes it not just a chatbot, but a reliable AI assistant. 💡

🔗 7. Building the RAG Pipeline

The RAG pipeline — short for Retrieval-Augmented Generation — is what allows RAGent to understand and answer questions from user-uploaded documents. It forms the intelligent bridge between stored content and natural language queries.

This pipeline is built around three major components:

- ✂️ Chunking and embedding documents

- 🧠 Storing chunks in Qdrant DB

- 🔍 Retrieving and answering using Gemini

Let's dive into each of these steps in detail.

✂️ 7.1 Chunking and Embedding Documents

Since large documents can't be passed directly to an LLM, we first break them into overlapping chunks and embed each one as a dense vector for efficient retrieval later on.

The DocumentChunker class (in chunker.py) uses LangChain's splitter to divide text into overlapping pieces.

from langchain.text_splitter import RecursiveCharacterTextSplitter

self.splitter = RecursiveCharacterTextSplitter(

chunk_size=Config.CHUNK_SIZE,

chunk_overlap=Config.CHUNK_OVERLAP

)

- Chunk size defines the length of each text segment

- Overlap ensures continuity of context between adjacent chunks

To avoid duplicate processing (common across similar documents), each chunk is hashed:

chunk_hash = hashlib.md5(text.encode('utf-8')).hexdigest()

Once chunked, the text is converted into vector form using a sentence transformer model (like BAAI/bge-base-en-v1.5):

from sentence_transformers import SentenceTransformer

self.model = SentenceTransformer(Config.EMBEDDING_MODEL_NAME)

def embed_documents(self, texts: List[str]):

return self.model.encode(texts, convert_to_numpy=True).tolist()

Each chunk is now a list of float values — a semantic fingerprint of its content.

🧠 7.2 Storing Chunks in Qdrant DB

Once embedded, chunks are stored in Qdrant — a high-performance vector database. This allows fast and scalable semantic retrieval across large document sets.

Inside qdrant_db.py, the method load_and_chunk_docs() automatically selects the right loader based on file type:

if ext == ".pdf":

docs = PDFPlumberLoader(file_path).load()

elif ext == ".docx":

docs = UnstructuredWordDocumentLoader(file_path).load()

...

Supported formats include:

- 📄 PDF, Word, Excel, PowerPoint

- 📎 CSV, JSON (with dynamic schema detection using jq)

- 📃 Plain Text

Each chunk is also tokenized for keyword-based BM25 retrieval:

tokenized_text = self.tokenize_for_bm25(text)

Qdrant indexes these tokens using a dedicated payload field:

self.client.create_payload_index(

collection_name=self.collection_name,

field_name="tokenized_text",

field_schema=TextIndexParams(...)

)

Each chunk is inserted as a PointStruct, containing the vector, raw text, tokens, and metadata:

PointStruct(

id=chunk["id"],

vector=embeddings[i],

payload={

"text": text,

"tokenized_text": tokenized_text,

**chunk["metadata"]

}

)

To optimize performance, batches of points are uploaded at once:

for i in range(0, len(all_points), Config.BATCH_SIZE):

self.client.upsert(...)

🔍 7.3 Retrieval Logic in RAG

Once the vector database is populated, the RAG pipeline comes to life — retrieving relevant chunks and generating answers using Gemini.

Using MemoryManager, we load past messages to preserve context:

self.memory = MemoryManager()

history_messages = self.memory.get(session_id)

This supports follow-up questions like “What about next year?” by understanding prior exchanges.

The query is passed to QdrantRetriever() which performs both semantic and keyword search:

- 📐 Vector search → based on meaning

- 📃 BM25 search → based on keywords

Hybrid scoring merges both results for better relevance:

final_score = α * bm25_score + (1 - α) * vector_scoreThe relevant chunks are passed to Gemini using a structured prompt loaded from rag_prompt.txt:

self.prompt = ChatPromptTemplate.from_messages([

("system", "{chat_history}\n\n" + system_prompt),

("human", "{input}")

])

The chain is built and executed:

self.qa_chain = create_stuff_documents_chain(self.llm, self.prompt)

response = self.chain.invoke(inputs)

This ensures that Gemini answers only using context — no hallucinations, no external guessing.

To maintain context, both the query and response are saved:

self.memory.add(session_id, HumanMessage(content=query))

self.memory.add(session_id, AIMessage(content=answer))

This enables smooth, multi-turn conversations across multiple files — without losing track.

With the RAG pipeline in place, RAGent becomes a fully functional knowledge assistant that can extract accurate answers, understand natural language, and keep track of long conversations — all grounded in real data. 📄🔍

📦 8. Retrieval with Qdrant

Retrieval is the core engine of any Retrieval-Augmented Generation (RAG) system. It's what transforms a static knowledge base into a dynamic, searchable brain. In the case of RAGent, we use Qdrant, a powerful open-source vector database, to fetch relevant document chunks using a combination of semantic search (via dense vectors) and lexical keyword search (via BM25).

Let's explore exactly how this retrieval process is built, from document preprocessing to real-time query matching.

🗃️ 8.1 Qdrant Retriever and Hybrid Search

The main gateway between our app and Qdrant is the QdrantRetriever class. This class inherits from LangChain's BaseRetriever and serves as the plug-and-play retriever for any user query.

Here's the basic structure:

class QdrantRetriever(BaseRetriever):

def __init__(self):

super().__init__()

self._qdrant_client = QdrantDBClient()

self._k = Config.TOP_K

def _get_relevant_documents(self, input: str, *, config: Optional[RunnableConfig] = None) -> List[Document]:

return self._qdrant_client.search(query=input, top_k=self._k)

This retriever class delegates the real work to QdrantDBClient, which handles the entire lifecycle of document processing: from chunking and embedding to upserting and querying.

Before any document can be retrieved, it must be broken down into manageable pieces. We use DocumentChunker to divide large documents into overlapping chunks that preserve contextual continuity.

class DocumentChunker:

def __init__(self):

self.splitter = RecursiveCharacterTextSplitter(

chunk_size=Config.CHUNK_SIZE,

chunk_overlap=Config.CHUNK_OVERLAP

)

- 📏 Chunk size: Controls how much text each chunk contains

- 🔗 Overlap: Ensures context flows between chunks

- 🧼 Normalization: Removes noise and standardizes formatting

- 🧠 Deduplication: Each chunk is hashed to avoid storing repeated content

📄 Example chunk output:

{

"id": 123456789,

"text": "The IT budget for 2023 was increased by 30%.",

"metadata": {

"source": "financial_report.pdf",

"chunk_order": 5

}

}

Once chunked, each piece of text is converted into a dense vector using BAAIEmbedder, which wraps a sentence-transformer model from Hugging Face:

class BAAIEmbedder(Embeddings):

def embed_documents(self, texts: List[str]) -> List[List[float]]:

return self.model.encode(texts, convert_to_numpy=True).tolist()

These vectors capture the semantic meaning of the text and allow similarity comparisons during retrieval. We also tokenize the original text for keyword matching using BM25.

Chunks are inserted into Qdrant using PointStruct. Each entry includes the following:

- 📊 Vector: For semantic search

- 📝 Tokenized text: For keyword-based BM25 search

- 📦 Metadata: Source file, chunk order, etc.

self.client.upsert(

collection_name=self.collection_name,

points=[

PointStruct(

id=chunk["id"],

vector=embeddings[i],

payload={

"text": normalized_text,

"tokenized_text": bm25_tokens,

**chunk["metadata"]

}

)

]

)

Qdrant also supports:

- 🧮 Payload indexing using whitespace tokenizer

- 🎯 Filtering with FieldCondition queries

- ⚡ Fast batch uploads for scalability

🔎 8.2 How Chunks Are Retrieved from the Vector DB

Once the chunks are stored in Qdrant, they can be retrieved using a hybrid approach that combines semantic similarity and exact keyword match. This improves robustness and helps capture both context and specificity.

The first pass uses tokenized text to match keywords using Qdrant's scroll() method:

bm25_results = self.client.scroll(

scroll_filter=Filter(

should=[

FieldCondition(key="tokenized_text", match=MatchText(text=token))

for token in query_tokens

]

)

)

In parallel, we compute the embedding of the query and perform vector similarity search:

vector_results = self.client.query_points(

query=query_embedding,

limit=top_k,

with_payload=True

)

- Results from BM25 and vector search are merged

- Duplicates are removed using content hashes

- A final hybrid score is calculated using a tunable formula:

final_score = ALPHA * bm25_score + (1 - ALPHA) * vector_score

The top results are then wrapped as LangChain Document objects for use in downstream pipelines.

- 🔁 Deduplication: Prevents storing repeated content

- 📊 Excel row support: Converts spreadsheet rows into paragraphs for better QA

- 📤 Chunk export: Useful for reviewing or debugging stored content

- 🧹 DB clearing: Removes all stored vectors using clear_qdrant_db()

- 1️⃣ Load and normalize documents using the correct loader

- 2️⃣ Split into chunks using DocumentChunker

- 3️⃣ Embed each chunk using the BAAI model

- 4️⃣ Tokenize for BM25 and store all in Qdrant

- 5️⃣ On query, run both BM25 and vector search

- 6️⃣ Merge results, calculate scores, return top matches

This hybrid retrieval mechanism gives RAGent both depth and precision — it can understand nuanced questions and find the best answers across a wide range of documents, formats, and phrasings. 🧠📁

🧭 9. Designing the ReAct Agent

At the heart of RAGent lies the ReAct agent — a reasoning engine that decides how to respond to any user query. This agent doesn't just “answer” questions — it analyzes the type of query, determines the best course of action, and calls on the appropriate tools or documents before producing a response. In short, the ReAct agent is the thinking brain behind the chatbot's intelligence.

Whether it needs to fetch information from uploaded files, look something up online, run calculations, or simply generate a helpful reply, the ReAct agent handles all of it — step-by-step, logically, and transparently.

🔁 9.1 ReAct Loop: Thought → Action → Observation → Final Answer

The agent's reasoning is based on a deliberate format known as the ReAct loop — short for Reasoning and Acting. This format forces the model to explain what it's doing at each step before reaching a final answer. This not only improves accuracy but also makes the chatbot's logic visible and verifiable.

Here's the core structure:

Thought: What am I trying to do?

Action: {"action": "tool_name", "action_input": "user query"}

Observation: What did the tool return?

...

Final Answer:

This format ensures that the model does not jump to conclusions or hallucinate responses. It uses actual tool outputs and walks through the logic before producing a final answer.

📚 Example:

Thought: The question asks for a document-based fact.

Action: {"action": "rag_search", "action_input": "What is K12HSN?"}

Observation: K12HSN refers to the California K-12 High-Speed Network.

Thought: This seems sufficient to answer the question.

Final Answer: K12HSN refers to the California K-12 High-Speed Network.

If the first tool doesn't help, the agent can continue the loop and try another tool. This chaining capability allows for layered and dynamic reasoning — a big leap beyond static question answering.

🧾 9.2 Prompt Format in agent_prompt.txt

This ReAct structure is strictly enforced by a carefully crafted prompt file: agent_prompt.txt. This file contains instructions that tell the language model exactly how to behave, format outputs, and select tools.

Here are some of the important rules defined in the prompt:

- 🧠 Always begin with a Thought

- 🧰 Use tools using valid JSON:

{"action": "wikipedia", "action_input": "LangChain"} - ⛔ Never answer immediately after an Observation

- 📦 Always try rag_search first for factual or document-based questions

- 🔁 Fall back to tools such as:

- 🌐 web_search

- 📚 wikipedia

- ☀️ weather

- ➗ calculator

- ✍️ llm_instruction (for rewriting, summarizing, or explanation)

- 🙅♂️ Never guess — respond only with observed or retrieved data

- 🛑 If no tool works, reply:

Final Answer: I couldn't find enough information.

This prompt enforces discipline, ensuring the model behaves like a careful analyst instead of a speculative chatbot.

🧠 9.3 Agent Workflow

Let's now break down how the ReAct agent is actually implemented inside the Agent class — the true entry point for every user query in RAGent.

First, we load the prompt content and configure Gemini with it as a system message:

prompt_content = self.load_prompt(Config.AGENT_PROMPT)

system_prompt = SystemMessage(content=prompt_content)

self.llm = GeminiLLM().get_client().with_config({

"system_message": system_prompt

})

All tools — like rag_search, web_search, weather — are loaded dynamically via a tool registry:

registry = ToolRegistry()

tools = registry.get_all_tools()

Now we combine the LLM and tools into a ReAct-style agent using LangChain's built-in initializer:

self.react_agent = initialize_agent(

tools=tools,

llm=self.llm,

agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,

verbose=True,

handle_parsing_errors=True

)

Finally, we pass the query and chat history into the agent and let it reason:

def run(self, query: str, history: list[BaseMessage] = None) -> str:

messages = history.copy() if history else []

messages.append(HumanMessage(content=query))

return self.react_agent.invoke(messages)

💡 Key idea: This is the first stop for any user query. The agent decides which tool to use, how to use it, and what to return — all based on logic, not guesswork.

Let's look at how real user queries are processed through this loop:

Example 1: Weather Lookup

User: "What is the current temperature in Tokyo?"

Thought: This is a weather-related question.

Action: {"action": "weather", "action_input": "Tokyo"}

Observation: 26°C, partly cloudy.

Final Answer: It's currently 26°C and partly cloudy in Tokyo.

Example 2: Document-Based Answer

User: "How much was the IT budget in 2023?"

Thought: This should be found in the uploaded documents.

Action: {"action": "rag_search", "action_input": "IT budget in 2023"}

Observation: The IT budget for 2023 was $1.2 million.

Final Answer: The IT budget in 2023 was $1.2 million.

By following this structure, RAGent becomes a truly intelligent assistant — not just a chatbot. It can think, explain, explore multiple options, and always back its answers with verifiable logic.

🛠️ 10. Developing Custom Tools

What gives RAGent its power and flexibility isn't just the LLM or document retrieval — it's the suite of custom-built tools the agent can call on. These tools extend the assistant's abilities beyond static answers, allowing it to search the web, calculate numbers, fetch definitions, summarize input, and much more — all based on what the user asks.

In this section, we'll dive into how these tools are structured, how they interact with the agent, and how they're discovered and used dynamically at runtime.

🧱 10.1 BaseTool Architecture

Every tool in RAGent is built on top of a shared foundation: the BaseTool class. This abstract class defines the standard interface and expected behavior for all tools, ensuring that the ReAct agent can treat them interchangeably.

Here's what it looks like:

class BaseTool(ABC):

def __init__(self, name: str, description: str):

self._name = name.lower()

self._description = description

@abstractmethod

def run(self, query: str) -> str:

pass

🛠️ Every tool must implement:

- name: A unique identifier used by the agent (e.g., "calculator")

- description: A brief explanation used by the LLM to decide when to call the tool

- run(): The method that actually performs the tool's task and returns a response

This makes tools pluggable and reusable across the system.

🔎 10.2 Overview of Tools

Let's explore the specific tools implemented in RAGent, what they do, and when they are used by the agent.

This tool runs the document retrieval pipeline using Qdrant and Gemini.

- Used for: Questions related to uploaded documents

- Backed by: RAGPipeline (retriever + LLM)

- Example: “What is the IT budget in 2023?” → calls rag_search

class RAGTool(BaseTool):

def __init__(self):

super().__init__(

name="rag_search",

description="Searches internal documents using vector database."

)

self.rag = RAGPipeline()

Connects to the Tavily API to retrieve real-time data from the internet.

- Used for: Current events, news, updates outside of the uploaded content

- Example: “Who won the F1 World Championship in 2024?”

class WebSearchTool(BaseTool):

def __init__(self):

super().__init__(

name="web_search",

description="Finds up-to-date info from the web using Tavily API."

)

self.api_key = os.getenv("TAVILY_API_KEY")

self.tavily_client = TavilyClient(api_key=self.api_key)

Queries Wikipedia for definitions and general knowledge.

- Used for: Well-known concepts or topics

- Example: “What is quantum computing?”

class WikipediaTool(BaseTool):

def __init__(self):

super().__init__(

name="wikipedia",

description="Fetches definitions and general knowledge from Wikipedia."

)

self.wiki_api = wikipediaapi.Wikipedia(user_agent="chatbot_user")

Uses the OpenWeather API to retrieve current weather data for a city.

- Used for: Questions about current weather + location

- Example: “What's the weather in Tokyo today?”

class WeatherTool(BaseTool):

def __init__(self):

super().__init__(

name="weather",

description="Provides current weather for a given city."

)

self.api_key = os.getenv("OPENWEATHER_API_KEY")

self.base_url = "http://api.openweathermap.org/data/2.5/weather"

Safely evaluates math expressions using numexpr.

- Used for: Simple or advanced numeric questions

- Example: “What is 2 to the power of 5?”

class CalculatorTool(BaseTool):

def __init__(self):

super().__init__(

name="calculator",

description="Evaluates math like '2**5', 'pi * 2**2', etc."

)

self.local_dict = {"pi": math.pi, "e": math.e}

This tool uses Gemini to perform creative and instructional tasks that don't involve searching documents or the web.

- Used for: Summarization, rewriting, storytelling, tone changes

- Example: “Rewrite this: Hey there, thanks for your help!”

class LLMInstructionTool(BaseTool):

def __init__(self):

super().__init__(

name="llm_instruction",

description="Handles summarization, rewriting, storytelling, and more."

)

self.llm = ChatGoogleGenerativeAI(

google_api_key=os.environ["GOOGLE_API_KEY"],

model=Config.LLM_MODEL,

temperature=Config.TEMPERATURE

)

🧩 10.3 Registering Tools Dynamically

Rather than hardcoding all tools, RAGent uses dynamic discovery via the ToolRegistry class. This lets you plug in new tools by simply dropping them into the tools/ folder — no extra wiring required.

Here's the logic behind it:

def register_tools(self):

"""Dynamically registers all available tools in the tools package."""

tool_modules = [name for _, name, _ in pkgutil.iter_modules([self.tools_package])]

for module_name in tool_modules:

try:

module = importlib.import_module(f"{self.tools_package}.{module_name}")

for attr_name in dir(module):

attr = getattr(module, attr_name)

if (

isinstance(attr, type)

and issubclass(attr, BaseTool)

and attr is not BaseTool

):

tool_instance = attr()

self.tools[tool_instance.name.lower()] = tool_instance

except Exception as e:

print(f"[ERROR] Failed to register tool '{module_name}': {e}")

- 🧩 Scans the entire tools/ folder

- 📦 Loads any class that inherits from BaseTool

- 🚀 Registers the tool automatically — no need to import manually

This system makes it easy to scale the assistant's capabilities by simply adding new tools.

Once registered, the tools are passed to the agent using:

tools = registry.get_all_tools()

Each tool is added to the agent as:

Tool(name, description, func)When the user submits a query, the agent chooses the right tool based on its description and the ReAct reasoning format.

User: “What's the full form of K12HSN and the weather in Tokyo?”

- 🔍 Agent first uses rag_search → "K12HSN stands for K-12 High-Speed Network"

- 🌦️ Then it uses weather → "26°C, partly cloudy in Tokyo"

- ✅ Combines results into a final answer:

Final Answer:

K12HSN stands for K-12 High-Speed Network. The current weather in Tokyo is 26°C and partly cloudy.

This flexible tool architecture makes RAGent adaptable, intelligent, and capable of answering any query with precision and logic. 🧠🔧

🧵 11. Memory Management

One of the key traits of a truly helpful assistant is the ability to remember past conversations. In RAGent, we've built in-memory session tracking to enable follow-up questions, context retention, and smooth multi-turn interactions.

This capability is powered by a class called MemoryManager located in chat_memory.py. It ensures that every chat session has its own memory and that both the user's questions and the assistant's answers are stored in a structured way.

💾 11.1 Role of Chat Memory

The MemoryManager class is designed to manage multiple chat sessions simultaneously. Each session is identified by a session_id, and the memory for that session is a chronological list of messages exchanged between the user and the assistant.

Here's how the class is structured:

class MemoryManager:

def __init__(self):

self.sessions: Dict[str, List[BaseMessage]] = {}

def get(self, session_id: str = "default") -> List[BaseMessage]:

if session_id not in self.sessions:

self.sessions[session_id] = []

return self.sessions[session_id]

def add(self, session_id: str, message: BaseMessage):

if session_id not in self.sessions:

self.sessions[session_id] = []

self.sessions[session_id].append(message)

def clear(self, session_id: str = "default"):

self.sessions[session_id] = []

def list_sessions(self) -> List[str]:

return list(self.sessions.keys())

Internally, the memory is a simple dictionary that maps each session ID to a list of LangChain-compatible message types:

- HumanMessage — represents user input

- AIMessage — stores assistant replies

- SystemMessage — used for custom instructions or role prompts

📌 Example usage:

memory = MemoryManager()

memory.add("test1", HumanMessage(content="What's the weather today?"))

memory.add("test1", AIMessage(content="It's sunny in Tokyo."))

This makes your chatbot feel more like a real assistant — capable of holding long conversations, referring back to previous questions, and making responses feel coherent and continuous.

🧵 11.2 Keeping Chat Context Across Queries

Memory isn't useful unless it's actively integrated into the reasoning pipeline. In RAGent, memory is used throughout the RAGPipeline to give Gemini the context it needs to answer accurately in multi-turn scenarios.

Each time a query comes in, the memory system retrieves the conversation history for the session:

history_messages = self.memory.get(session_id)

chat_history_str = self.messages_to_string(history_messages)

The messages_to_string() method converts this history into a readable string for prompt injection:

user: What is K12HSN?

assistant: It stands for K-12 High-Speed Network.

user: What does the abbreviation stand for?

This formatted chat history is injected into Gemini's system prompt, ensuring the LLM has full context when generating the next answer:

ChatPromptTemplate.from_messages([

("system", "{chat_history}\n\n" + system_prompt),

("human", "{input}")

])

Once Gemini responds, the assistant saves both the user question and the AI answer back to memory:

self.memory.add(session_id, HumanMessage(content=query))

self.memory.add(session_id, AIMessage(content=answer))

This allows the assistant to stay in sync with the user across follow-up queries, even if they refer to things mentioned several messages earlier.

🧠 Example interaction:

- Q1: What is the full form of K12HSN?

- A1: K12HSN stands for K-12 High-Speed Network.

- Q2: What does the abbreviation stand for?

- A2: It stands for K-12 High-Speed Network.

By maintaining full chat history per session, RAGent becomes smarter and more natural — capable of drawing context from earlier interactions and providing coherent responses even in complex, branching conversations.

💬 12. Gradio Web UI

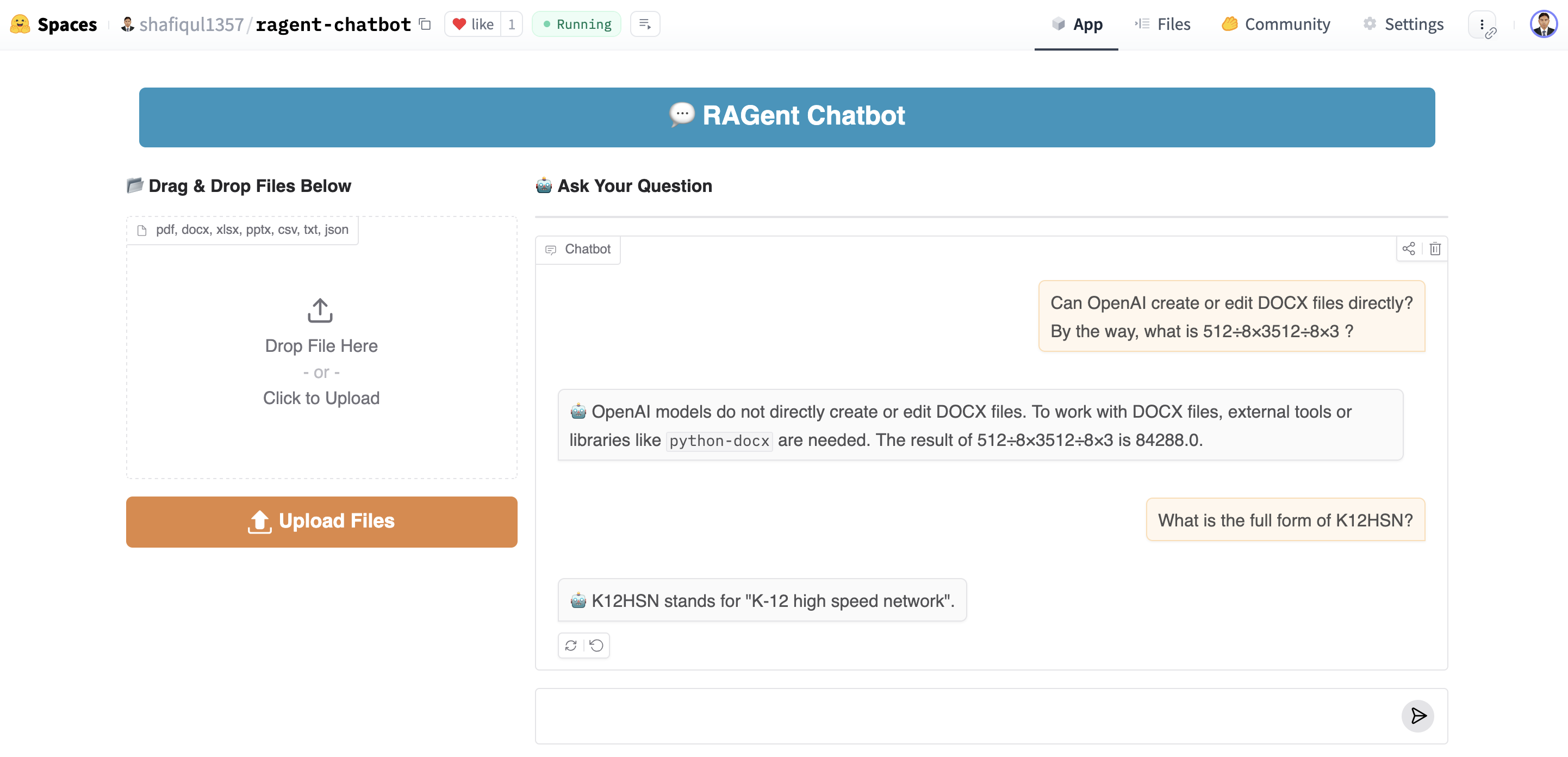

To make the RAGent Chatbot accessible and easy to use, we've built a clean, interactive interface using Gradio. The application is split into two major panels:

- 📂 Left Panel — File Upload: Upload documents like PDFs, Excel, Word, etc.

- 💬 Right Panel — Chat Interface: Ask natural language questions and get intelligent answers powered by RAG + tools.

Together, these panels form a seamless workflow where users can upload knowledge sources and query them instantly — all without any coding or configuration.

📂 12.1 File Upload Interface

The left panel allows users to upload one or more documents in the following supported formats:

- PDF: Standard document reports and articles

- DOCX: Microsoft Word files

- XLSX: Excel spreadsheets

- PPTX: Presentation slides

- CSV, TXT, JSON: Structured and unstructured text data

Once the user clicks the Upload Files button, the system processes each file in the background:

- 📄 File type detection and format-specific parsing

- ✂️ Content chunking using DocumentChunker

- 🧼 Text normalization and deduplication

- 🧠 Embedding each chunk using BAAIEmbedder

- 🧩 BM25 tokenization for hybrid search

- 📥 Final storage in QdrantDB with vector + text + metadata

The actual upload logic in app.py uses chained callbacks to show progress and handle errors gracefully:

self.upload_btn.click(

fn=self.clear_outputs,

inputs=[],

outputs=[self.progress_output, self.status_output]

).then(

fn=self.upload_and_process,

inputs=self.uploaded_files,

outputs=[self.progress_output, self.status_output],

show_progress="hidden"

)

✅ After successful upload, the UI confirms with:

3/3 file(s) processed and stored in DB!

🤖 12.2 Chat Interface Logic

The right panel is where the magic happens — the actual conversation with the RAGent assistant.

Behind the scenes, this chat interface calls the ReAct agent, which determines how to answer each question by combining reasoning and tool use:

response = self.agent.run(query, past_messages)

The decision flow works like this:

- 🔍 Step 1: Try rag_search to look up answers from uploaded documents via Qdrant

- 🌐 Step 2: If nothing relevant is found, try external tools:

- web_search for current events

- wikipedia for factual definitions

- weather for city-specific updates

- calculator for math problems

- 🧠 Step 3: As a last resort, use llm_instruction for summarization, rewriting, or creative tasks

All of this happens without the user needing to specify which tool to use — the agent decides based on the query type.

🧾 12.3 Tool Responses and Chat History

Every response from the assistant includes the final answer produced by one or more tools. In addition:

- 💾 All messages are saved in session memory using MemoryManager

- 🔁 Full chat history is preserved, so follow-up questions remain context-aware

self.memory.add(session_id, HumanMessage(content=query))

self.memory.add(session_id, AIMessage(content=answer))

🧪 Example Multi-Tool Query:

→ Agent uses: rag_search for salary + weather for city weather

→ Final Answer: Deborah Downs earns $72,600. The temperature in Tokyo is 25°C with light rain and 78% humidity.

🔗 12.4 Try Live RAGent App: GitHub & Hugging Face

Want to try RAGent in action or explore the code?

- 🧠 GitHub Repository:

👉 github.com/shafiqul-islam-sumon/ragent-chatbot - 🚀 Live Hugging Face App:

👉 huggingface.co/spaces/shafiqul1357/ragent-chatbot

With the live app, you can:

- 📄 Upload your own files

- 💬 Ask real-world questions

- 🧠 Watch the agent think and reason

- 🔧 See tools like weather, Wikipedia, and search in action

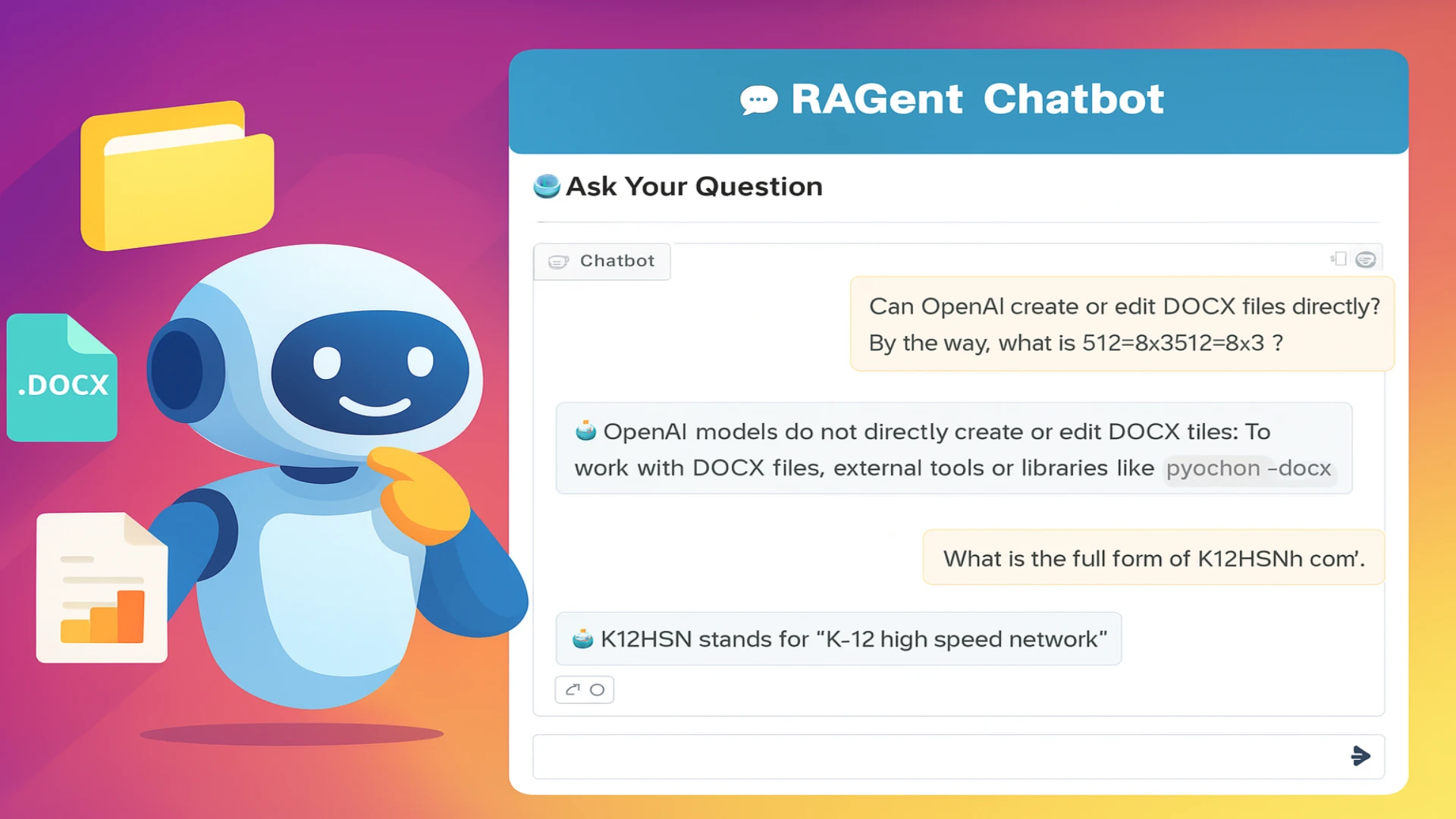

🖼️ App Preview:

Figure 1 : RAGent Chatbot UI

👆 This is what the interface looks like. Upload docs, ask questions, and let the agent do the thinking!

🎯 13. Conclusion

The RAGent Chatbot is more than just another AI chatbot — it's a full-fledged intelligent assistant built around a modular, explainable, and extensible architecture. By smartly combining several key technologies, RAGent delivers an experience that's both powerful and practical.

Here's a quick recap of what makes this system unique:

- 🔍 Retrieval-Augmented Generation (RAG): Enables accurate, document-grounded responses based on user-uploaded files

- 🛠️ Dynamic Tool Integration: Lets the agent solve a wide range of tasks — from weather to Wikipedia to math

- 🧠 LLM-Based Fallback: Handles open-ended, creative, or general queries when retrieval tools don't apply

- 💾 Chat Memory: Maintains context throughout the conversation, enabling natural, multi-turn interactions

- 🖥️ Gradio Web UI: Provides a clean and intuitive interface for users to upload documents and chat effortlessly

From answering factual queries to integrating external knowledge and using logical reasoning steps — everything is stitched together in one seamless loop. The system is highly customizable and can be extended in many ways:

- ➕ Add new tools — like SQL executor, code runner, or finance APIs

- 🔄 Swap in other models — use different embedding models or LLMs based on your budget or preference

- 📈 Boost accuracy — apply re-ranking or response rephrasing for even smarter outputs

- ☁️ Deploy anywhere — Hugging Face, Streamlit Cloud, or private servers for full control

🔮 In short: RAGent isn't just a chatbot — it's a template for intelligent, tool-augmented assistants. A blueprint for modular AI applications that are practical, explainable, and extensible.

Technical Stacks

Technical Stacks

-

Python

-

Gradio

-

Hugging Face

-

RAG

RAG

-

Google Gemini

-

Qdrant

-

LangChain

-

Prompt Engineering

Prompt Engineering

-

Wikipedia

-

Tavily API

Tavily API

-

OpenWeatherMap API

OpenWeatherMap API

Download Source Code :

RAGent Chatbot

Download Source Code :

RAGent Chatbot

📚 References

- 🔗 GitHub Repository: RAGent Chatbot

- 🤗 Live App in Hugging Face Space: RAGent Chatbot App

-

Python: Python Website

-

Gradio: Gradio Website

-

Medium Article: Read the Blog on Medium

-

Google Gemini: Try Gemini

-

LangChain: LangChain Website

-

Qdrant: Qdrant Website

Home

Home About

About